How to scrape a table from a website with Python

Getting information is always the starting point for data journalism. Sometimes the information we want lives on a web page, and Python is a great tool for collecting it so we can filter and analyze it. This tutorial, based on “Web scraping with Python” by Cody Winchester from the Investigative Reporters and Editors conference on June 24, 2023, will take a simple table on a web page and turn it into a .CSV file to get you warmed up for web scraping with Python. Follow Winchester on GitHub for more information.

Here’s a checklist before we start:

- Make sure Python is installed on your computer. If not, Installing Python The IRE Way is an easy-to-follow guide.

- Install the packages “requests,” “beautiful soup 4” and “Jupyter Notebook.” You can run “pip install requests jupyter bs4” in your Terminal app (Mac) or the Command Prompt program (Windows).
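If you are not sure the installation worked, a quick check along these lines can help. This is a minimal sketch that uses only the standard library; the helper name missing_packages is made up for illustration, and it assumes the packages are imported under the names requests and bs4.

```python
from importlib.util import find_spec


def missing_packages(names):
    """Return the names from `names` that Python cannot find for import."""
    return [name for name in names if find_spec(name) is None]


# "requests" and "bs4" are the import names of the packages installed above.
missing = missing_packages(["requests", "bs4"])
if missing:
    print("Still need to install:", ", ".join(missing))
else:
    print("All set.")
```

If a name is printed, run the pip command again and look for error messages.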

Here is the website where we’ll find the table we want to scrape. The table has two header rows and three columns. Let’s say we want to scrape all of it.

First, open Jupyter Notebook and start a new file. Type jupyter notebook in Terminal (Mac) or Command Prompt (Windows), click the “New” button in the upper-right corner, then choose “Python 3.”

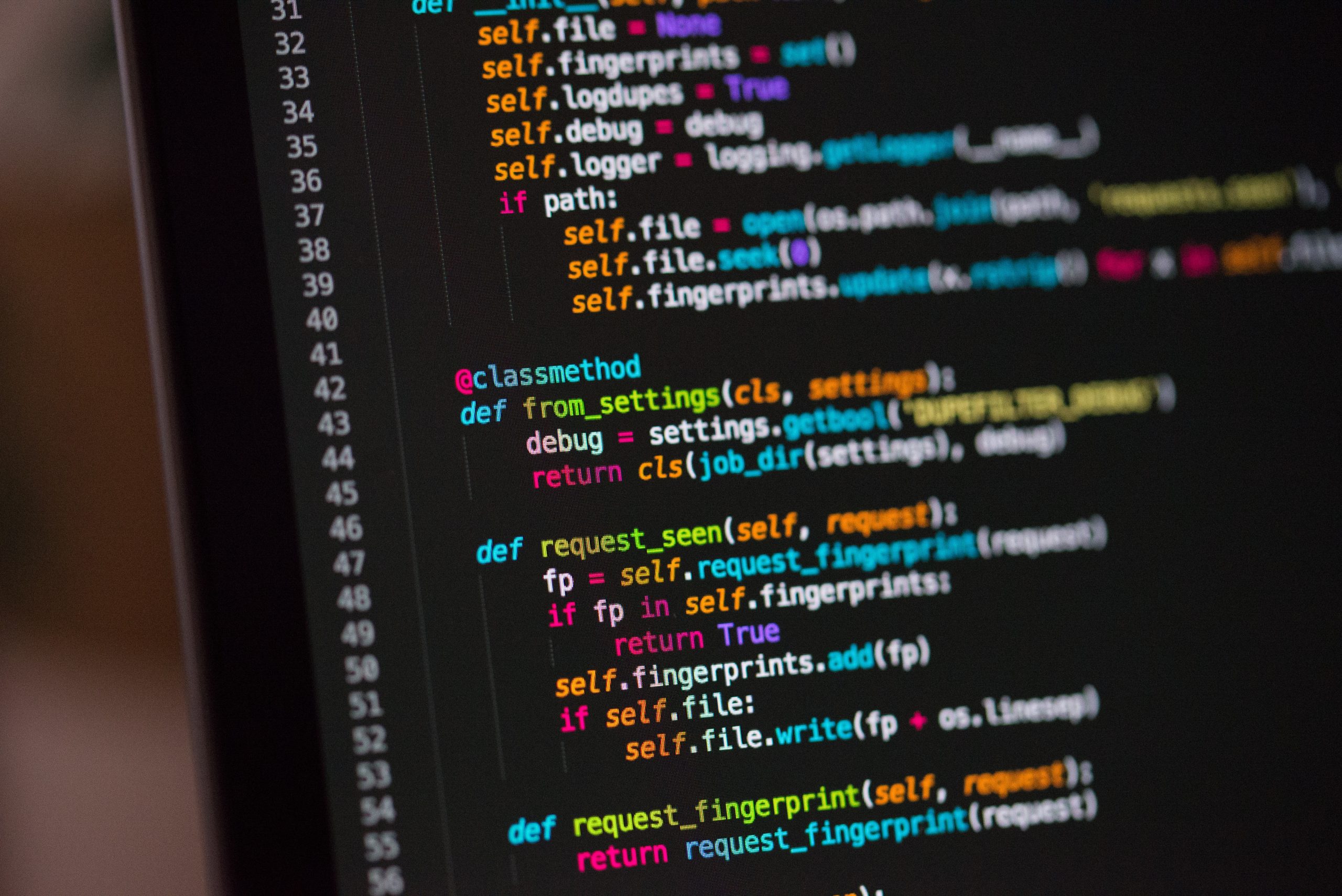

In the notebook, use the code that follows to import the “requests” and “beautiful soup 4” libraries so we can use them later.

import requests
import bs4

Create a variable to store the URL of the website. We use “=” to assign a value to a new variable.

URL = 'https://www.topuniversities.com/student-info/choosing-university/worlds-top-100-universities'

Use get() from the “requests” library to download the HTML of the web page.

req = requests.get(URL)

To check whether the request succeeded, we can use the code below. If the result is “200,” the request was successful and we can go ahead. If a different code comes up, we can use this site to check what it means.

req.status_code

Now we have a pile of HTML text, which looks very messy. You can check by running req.text.
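If the status code from the earlier check is something other than 200, Python’s standard library can also tell you what it means. A small sketch, assuming nothing beyond the built-in http module; the describe_status helper is a hypothetical name used only for illustration.

```python
from http import HTTPStatus


def describe_status(code):
    """Return a short, human-readable label for an HTTP status code."""
    try:
        return f"{code} {HTTPStatus(code).phrase}"
    except ValueError:
        # HTTPStatus only knows the registered status codes.
        return f"{code} (unrecognized status code)"


print(describe_status(200))  # 200 OK
print(describe_status(404))  # 404 Not Found
print(describe_status(403))  # 403 Forbidden
```

In the notebook, you could call describe_status(req.status_code) right after making the request.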

We will use beautiful soup to organize the HTML into a data structure that is workable for Python.

soup = bs4.BeautifulSoup(req.text, 'html.parser')

You can run ‘soup’ on its own to see whether the text is well structured.

Use find_all() to grab the elements that match the criteria we set. In this case, we need to grab the table first. The number 0 here means to get the first table on this web page.

In HTML,

- <table> means this block is a table.

- <tr> means table row.

- <td> means table data.

- Each <td> is wrapped in a <tr>, which is wrapped in a <table>.

In Python, counting starts at 0, so 0 refers to the first element, 1 to the second and so on.
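To see that nesting in action without making a web request, here is a sketch that parses a tiny hand-written table. The HTML string and its values are made up for illustration and are not taken from the page we are scraping.

```python
import bs4

# A made-up table for illustration: two header rows, then two data rows.
sample_html = """
<table>
  <tr><th colspan="3">Sample ranking</th></tr>
  <tr><th>Rank</th><th>Name</th><th>Location</th></tr>
  <tr><td>1</td><td>Example University</td><td>Country A</td></tr>
  <tr><td>2</td><td>Sample College</td><td>Country B</td></tr>
</table>
"""

sample_soup = bs4.BeautifulSoup(sample_html, 'html.parser')

# Index 0 is the first (and here the only) table in the HTML.
sample_table = sample_soup.find_all('table')[0]

# Each <tr> is one row; each <td> inside it is one cell of data.
sample_rows = sample_table.find_all('tr')
print(len(sample_rows))  # 4 rows: 2 header rows + 2 data rows

# Index 2 is the third row, which is the first row of data.
first_cells = sample_rows[2].find_all('td')
print(first_cells[1].text)  # Example University
```

In this sample the header cells use <th>, so find_all('td') returns an empty list for the header rows. A real page may be marked up differently, so it is worth inspecting its HTML first.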

table = soup.find_all('table')[0]

Then, we can grab all the table rows in this table.

rows = table.find_all('tr')

We can store the table data we collect in different variables. Because the table on the web page has two header rows, the data we want to scrape starts from the third row, so we use [2:].

rank = cells[0].text.strip() stores the text of the first cell in each row we picked. strip() trims whitespace from the beginning and end of each value.
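The slice and strip() steps are plain Python, so you can try them on an ordinary list and string first. The values below are invented for illustration.

```python
# A stand-in for the list of rows: two header rows, then the data.
example_rows = ['header 1', 'header 2', 'row A', 'row B', 'row C']

# [2:] skips the items at positions 0 and 1 and keeps everything after.
kept_rows = example_rows[2:]
print(kept_rows)  # ['row A', 'row B', 'row C']

# strip() removes whitespace (spaces, tabs, newlines) from both ends only.
messy_value = '\n   Example University  \t'
clean_value = messy_value.strip()
print(clean_value)  # Example University
```

Note that strip() leaves the space between the two words alone; only the outer whitespace is removed.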

Using print here, we can see the data we get.

for row in rows[2:]:
    cells = row.find_all('td')
    rank = cells[0].text.strip()
    college_name = cells[1].text.strip()
    location = cells[2].text.strip()
    print(rank, college_name, location)
    print()

Import the csv module to export the data.

import csv

Write the header for this CSV file.

HEADERS = [
    'Rank',
    'College_Name',
    'Location',
]

For the last step, export the CSV file:

- Name it “top-100-university.csv”

- Use write mode, “w”

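- Pass newline='' so Python does not translate line endings and the csv module can write them itself.

encoding='utf-8' helps avoid errors when the data contains characters that your system’s default encoding cannot represent.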
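To see what those options do, here is a small sketch that writes two rows to a temporary file and looks at the raw bytes. The file name and the sample values are made up; only csv, os and tempfile from the standard library are used.

```python
import csv
import os
import tempfile

# Write to a throwaway folder so nothing in your project is touched.
with tempfile.TemporaryDirectory() as tmp_dir:
    sample_path = os.path.join(tmp_dir, 'sample.csv')

    with open(sample_path, 'w', newline='', encoding='utf-8') as outfile:
        writer = csv.writer(outfile)
        writer.writerow(['Rank', 'College_Name'])
        writer.writerow(['1', 'Universität Beispiel'])

    # Read the file back as bytes to see exactly what was written.
    with open(sample_path, 'rb') as infile:
        raw_bytes = infile.read()

print(raw_bytes)
```

By default, the csv writer ends each row with \r\n. Because newline='' stops Python from translating line endings, the file comes out the same on Windows and Mac; without it, Windows can end up with a blank line between rows. The “ä” is stored as two bytes, which is how UTF-8 encodes it.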

For the next commands, write the headers first and copy the code that accesses the table data from above. Instead of printing it out, use writer.writerow() to write it to the file.

with open('top-100-university.csv', 'w', newline='', encoding='utf-8') as outfile:
    writer = csv.writer(outfile)
    writer.writerow(HEADERS)
    for row in rows[2:]:
        cells = row.find_all('td')
        rank = cells[0].text.strip()
        college_name = cells[1].text.strip()
        location = cells[2].text.strip()
        data_out = [rank, college_name, location]
        writer.writerow(data_out)

After running the code above, you will see a new CSV file in the same folder as your notebook that contains the table data we just scraped.
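To double-check the export, you can read the file back with csv.reader. The sketch below writes a stand-in file with made-up rows to a temporary folder so that it runs on its own; in your notebook you would open 'top-100-university.csv' instead.

```python
import csv
import os
import tempfile

with tempfile.TemporaryDirectory() as tmp_dir:
    # Stand-in for the exported file, filled with invented values.
    check_path = os.path.join(tmp_dir, 'check.csv')
    with open(check_path, 'w', newline='', encoding='utf-8') as outfile:
        writer = csv.writer(outfile)
        writer.writerow(['Rank', 'College_Name', 'Location'])
        writer.writerow(['1', 'Example University', 'Country A'])
        writer.writerow(['2', 'Sample College', 'Country B'])

    # Read it back: the first row is the header, the rest are data.
    with open(check_path, newline='', encoding='utf-8') as infile:
        reader = csv.reader(infile)
        header_row = next(reader)
        saved_rows = list(reader)

print(header_row)
print(len(saved_rows), 'data rows')
```

If the number of data rows matches the number of rows in the table on the web page, the export most likely captured everything.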

Congratulations, you have taken a step into the castle of web scraping with Python and are ready to explore more! Try it with data you want to use.

- How to scrape a table from a website with Python - November 7, 2023