Covering TikTok’s algorithm: How one tech reporter demystified a TikTok trend

Frances Haugen, a former data scientist at Facebook, recently made several allegations against the social media giant before a U.S. Senate subcommittee. In gripping testimony, she described how Facebook and Instagram harm young people, purposefully sowing division and amplifying misinformation for the sake of profit.

But perhaps the most popular social media company is also the least regulated: TikTok. The video-sharing app, which gained widespread popularity during the COVID-19 pandemic, runs on an algorithmically driven feed that is notorious for surfacing extremist content. The app has long been owned by ByteDance, a Chinese tech company.

So how can tech reporters cover TikTok when so much about the app and its algorithm remains unknown? Kari Paul, a tech reporter for The Guardian, dug into the algorithm and shares insights on the concerning patterns that affect teens every day.

On her personal TikTok account, Paul started noticing a popular hashtag that kept popping up: #whatieatinaday. Videos under the hashtag usually showed young teens showing off what they ate in a day, which sometimes amounted to concerningly little food.

Paul thought back to Haugen’s recent comments about how sites like Facebook and Instagram were especially harmful to young girls already struggling with body image issues. So Paul created a burner TikTok account, a new account where she could reset her algorithm and dive into this seemingly popular facet of TikTok.

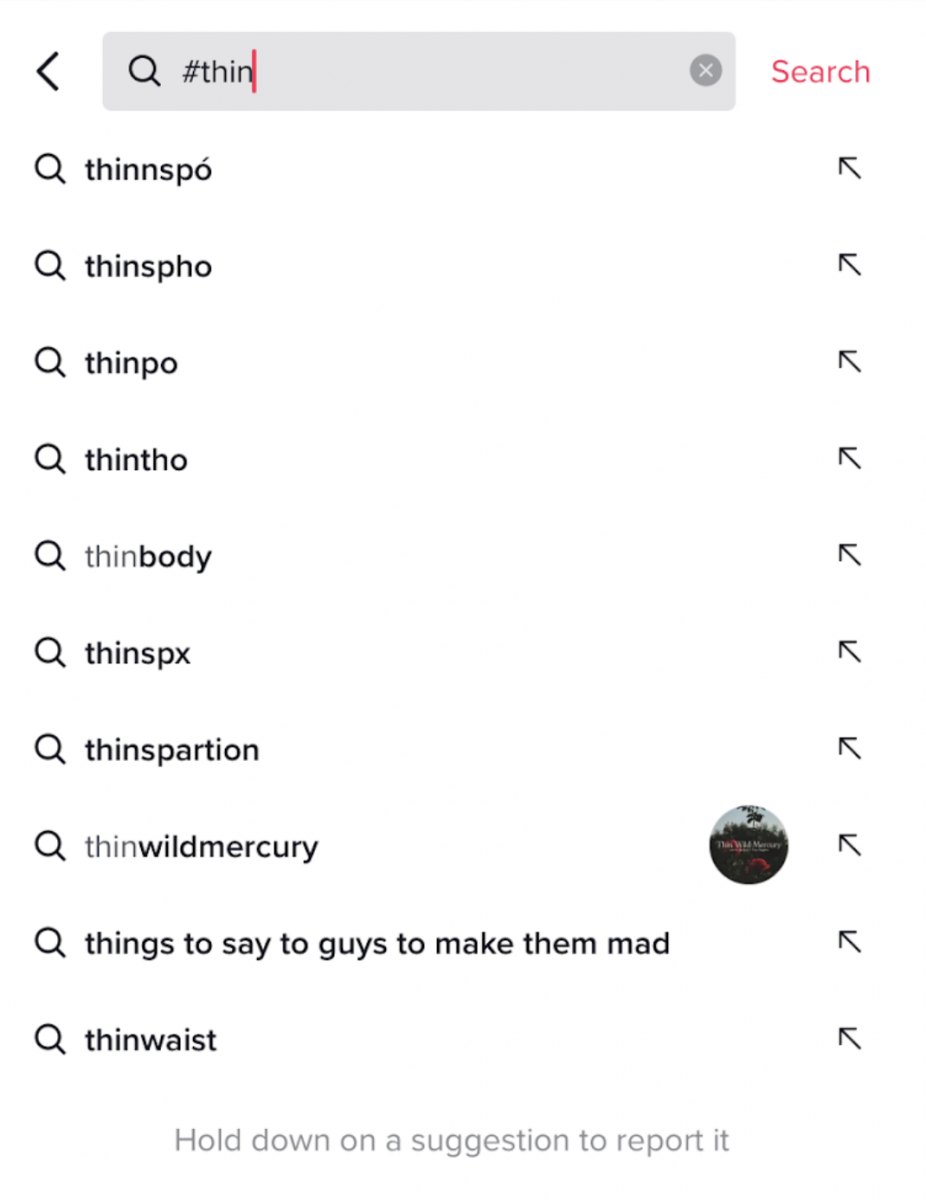

When Paul interacted with #whatieatinaday videos, liking them or watching them all the way through, her feed started to change. First, videos promoting restrictive diets or intensive workouts started showing up. Then she started clicking on the hashtags in the video captions. “Someone would post #paleo or #keto and I would click that and then that would go to hashtags like weight loss,” she said. “And weight loss obviously easily led to all sorts of other stuff, and then somehow I was suddenly on eating disorder TikTok.”

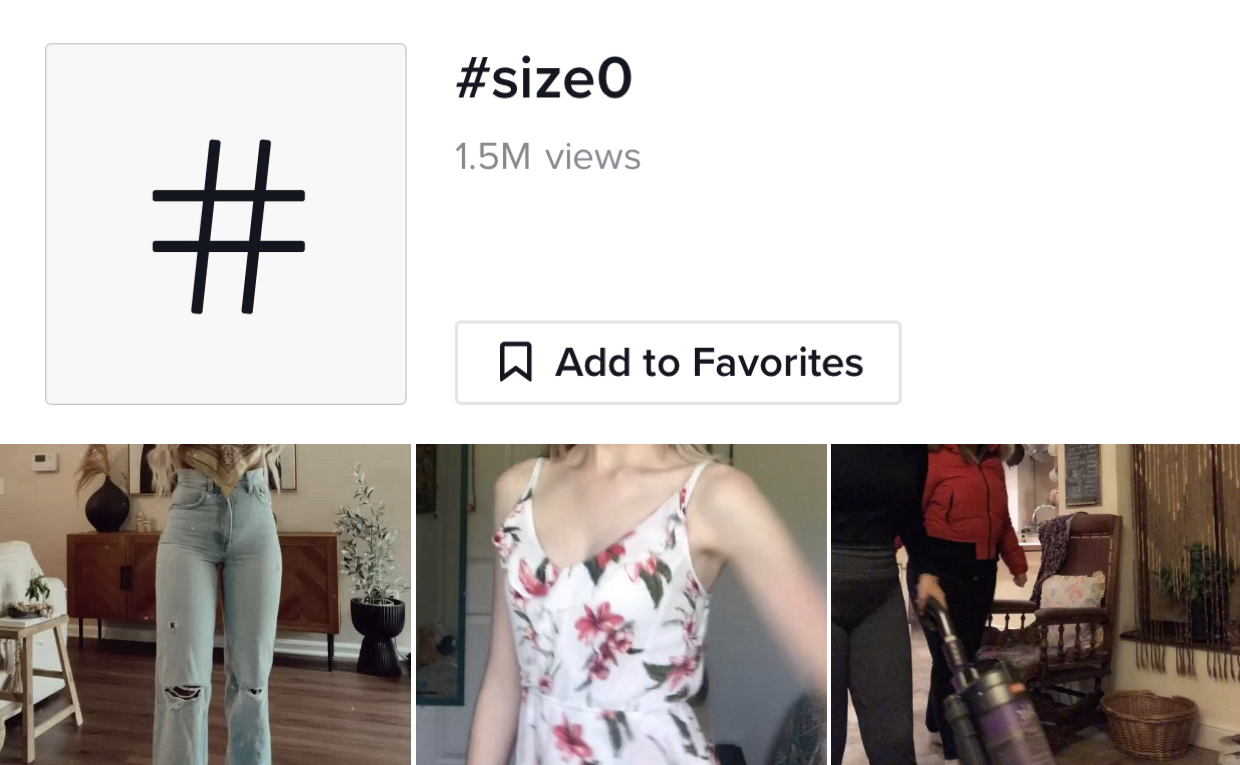

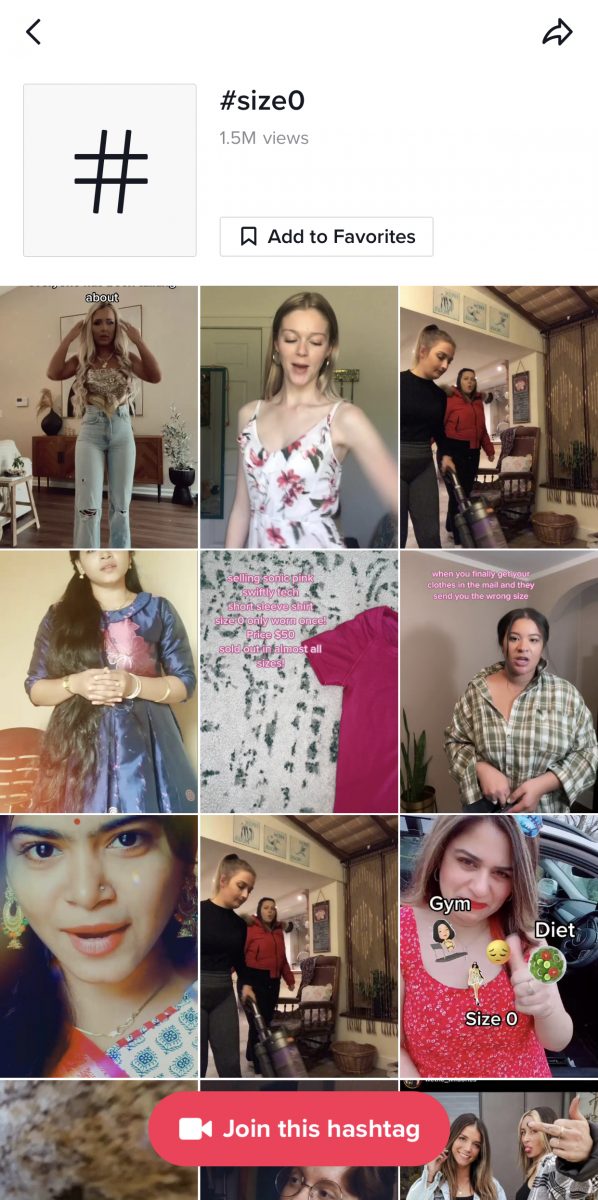

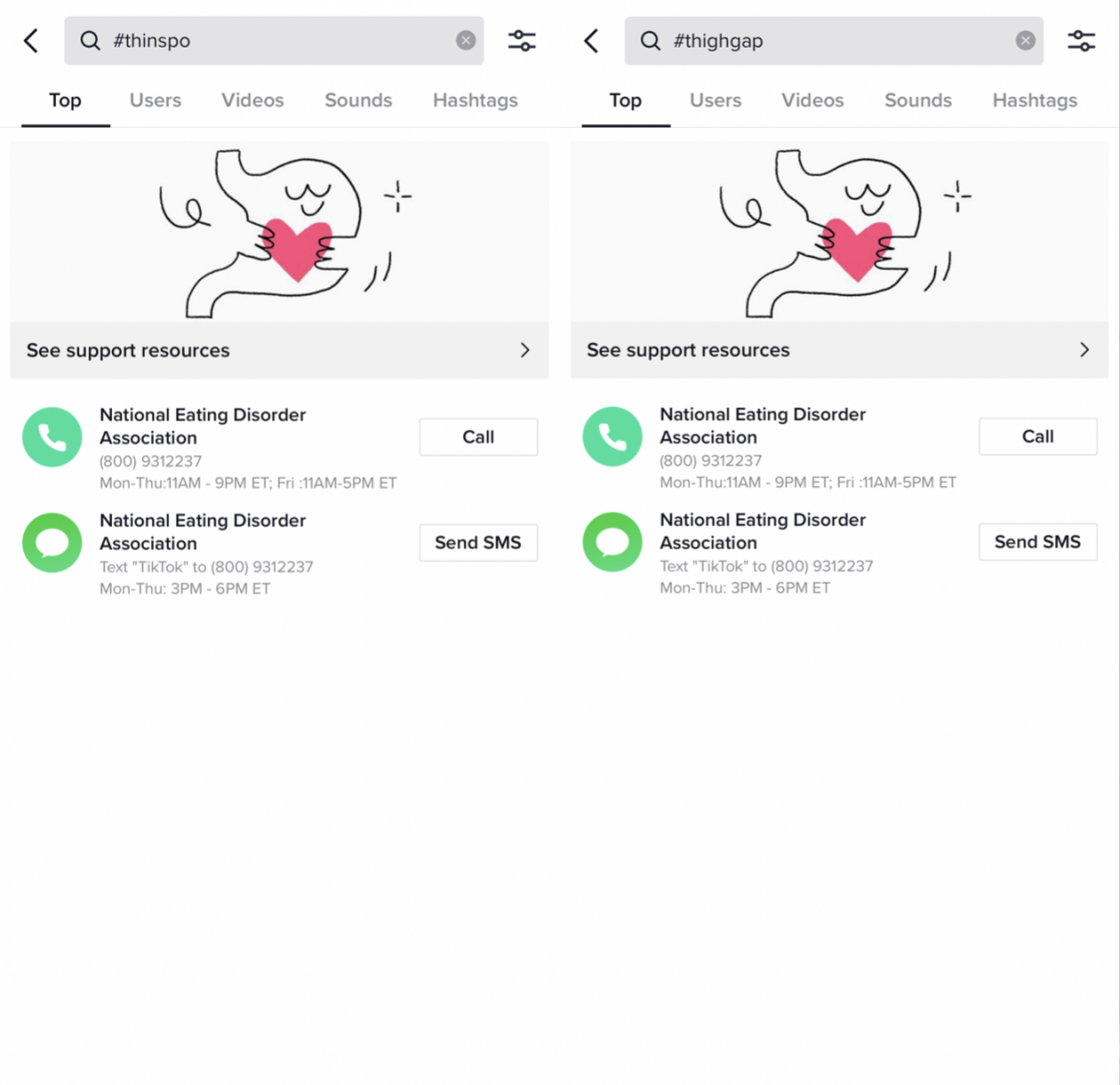

Paul found that at least two dozen pro-anorexia hashtags remain searchable on TikTok. Hashtags that have been banned from Instagram were found on TikTok; hashtags like “skinnycheck” had over 1 million views, “size0” had 1.4 million views and “thighgapworkout” had 2.6 million views.

To investigate further, she joined several Facebook groups for concerned parents of children with eating disorders. They were increasingly worried about social media platforms like TikTok that they couldn’t personally regulate. Paul also connected with a 17-year-old who has had an eating disorder since she was 15 and avoids TikTok because it often triggers her disorder.

She told Paul, “people use ‘#whatieatinaday’ to brag about their diet, and it is often not even enough food for a toddler. It is ruining the idea of what it means to be healthy for people my age.”

The content Paul uncovered is not only unregulated by TikTok; it can also surface without users even searching for it. That’s how TikTok’s algorithm works: when users interact with a video carrying specific hashtags, the algorithm, which isn’t human-driven, takes these interactions into account and serves up videos it deems similar.
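TikTok has not published how its recommendation system works, so the following is only a toy illustration of the feedback loop described above, not TikTok’s method. It assumes, purely for the sake of the sketch, that a feed scores each candidate video by how many of its hashtags the user has already engaged with. Every name here (`update_interests`, `rank_feed`, the sample videos) is hypothetical.

```python
from collections import Counter

def update_interests(interests, hashtags, weight=1.0):
    """Record an interaction (a like or a full watch) with a video's hashtags."""
    for tag in hashtags:
        interests[tag] += weight
    return interests

def score(interests, hashtags):
    """Toy similarity: sum of the user's accumulated weight on each hashtag."""
    return sum(interests[tag] for tag in hashtags)

def rank_feed(interests, candidates):
    """Order candidate videos from most to least similar to past interactions."""
    return sorted(candidates, key=lambda v: score(interests, v["hashtags"]), reverse=True)

# Hypothetical candidate videos; the hashtags come from the article.
candidates = [
    {"id": "cooking", "hashtags": ["recipe"]},
    {"id": "diet", "hashtags": ["keto", "weightloss"]},
    {"id": "extreme", "hashtags": ["weightloss", "skinnycheck"]},
]

interests = Counter()
# The user likes one #whatieatinaday video that is also tagged #keto.
update_interests(interests, ["whatieatinaday", "keto"])
first_feed = [v["id"] for v in rank_feed(interests, candidates)]

# Engaging with the diet video adds #weightloss, which pulls the next video up.
update_interests(interests, ["keto", "weightloss"])
second_feed = [v["id"] for v in rank_feed(interests, candidates)]
```

In this sketch the user never searches for anything: a single interaction moves the diet video to the top of the feed, and engaging with that video lifts the next, more extreme one above unrelated content.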

TikTok’s algorithm tends to promote extremist content at a rapid pace, according to a March 2021 study conducted by Media Matters, a left-leaning website. The study found that TikTok often pushed videos promoting far-right groups and movements like QAnon, Patriot Party and the Oath Keepers, keeping users in a bubble that continually points to more far-right misinformation.

Paul said part of the reason TikTok is so unregulated compared to other social media platforms is that the company isn’t based in the U.S.

“We know even less about it and its algorithm than we do about Facebook and Instagram and Twitter, so I think that is troubling on its own,” Paul said. “I have never seen something that is so responsive to your previously viewed content. TikTok seems to be very hyper-curated.”

Especially since Frances Haugen’s testimony in front of a Senate subcommittee in early October, bills are being proposed in Congress with the goal of regulating social media. One bill would ban specific features of a site for users under age 16, like automatic video-play, “like” buttons and follower counts. Another would prohibit the collection of personal data from users between 13 and 15 years old without their consent.

But even if these bills were to pass, they wouldn’t necessarily impact TikTok since ByteDance, the app’s parent company, is primarily based in China.

Covering TikTok has thus emerged as a challenge for tech reporters like Paul. Besides connecting with actual users, Paul advised, journalists should carefully document their findings in the app. She took screenshots of every new page, video and hashtag that surfaced from her previous clicks in order to map TikTok’s rabbit-hole effect.
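For reporters who want to keep that kind of record in a structured form, one option is to log each click as a link between two hashtags along with the screenshot that documents it. The sketch below is an assumption about how such a log could look, not a description of Paul’s actual workflow; the `ClickLog` class and the screenshot filenames are hypothetical.

```python
from collections import defaultdict, deque

class ClickLog:
    """Records which hashtag led to which, with a screenshot as evidence."""

    def __init__(self):
        self.edges = defaultdict(list)  # source hashtag -> [(target, screenshot)]

    def record(self, source, target, screenshot):
        """Log one click from a source hashtag to the hashtag it led to."""
        self.edges[source].append((target, screenshot))

    def path(self, start, goal):
        """Return the shortest recorded chain of hashtags from start to goal."""
        queue = deque([[start]])
        seen = {start}
        while queue:
            trail = queue.popleft()
            if trail[-1] == goal:
                return trail
            for target, _ in self.edges.get(trail[-1], []):
                if target not in seen:
                    seen.add(target)
                    queue.append(trail + [target])
        return None  # no recorded route between the two hashtags

log = ClickLog()
# Hypothetical screenshot filenames; the hashtags come from the article.
log.record("whatieatinaday", "keto", "shot-001.png")
log.record("whatieatinaday", "paleo", "shot-002.png")
log.record("keto", "weightloss", "shot-003.png")

route = log.path("whatieatinaday", "weightloss")
no_route = log.path("weightloss", "whatieatinaday")
```

A log like this makes it possible to show readers and editors the exact sequence of clicks between an innocuous hashtag and a harmful one, with a screenshot backing up each step.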

Haugen, in her testimony, recommended that social media sites go back to a chronological feed instead of an algorithmically driven one. While Facebook and Instagram both previously operated on a chronological system, TikTok never did. Its popularity, after all, is partially due to its algorithm, a system that serves videos to users who don’t even know what they want to see.

“As a consumer, I enjoy algorithmically driven content. I love that my feed is relevant to me,” Paul said. “But unfortunately, we live under capitalism and any time something is tailored to a user experience involving advertising, it’s going to end up being toxic because they’re trying to drive engagement as much as possible to make more money.”

Since The Guardian published Paul’s investigation, TikTok has removed eight of the hashtags Paul found. But new ones are constantly popping up, often as variations on old hashtags. Users will always find ways to create content, but the responsibility falls on TikTok to moderate it, Paul said.

“I don’t want to say that users have no responsibility, but I’m just not concerned with their responsibility because they’re not a multimillion dollar company,” Paul said. “The onus is on the company to spend some of their millions of dollars on moderating content that’s harmful to people, and the onus is also on regulators to do more to help this issue. It’s just so unregulated right now.”

- Covering TikTok’s algorithm: How one tech reporter demystified a TikTok trend - December 13, 2021