Facebook continues to run political ads with misinformation

Facebook claims it has enacted measures to stem the spread of misinformation on its platform since the 2016 election. But the platform seems to be applying a different set of standards to politicians.

Earlier this month, Elizabeth Warren’s campaign released an ad on Facebook falsely claiming that Mark Zuckerberg had endorsed Donald Trump for re-election – an intentional lie meant to challenge Facebook’s advertising policies. Warren claims President Donald Trump has used loopholes in those policies to his advantage. Warren later stated that the information was untrue and linked to a petition to break up big tech.

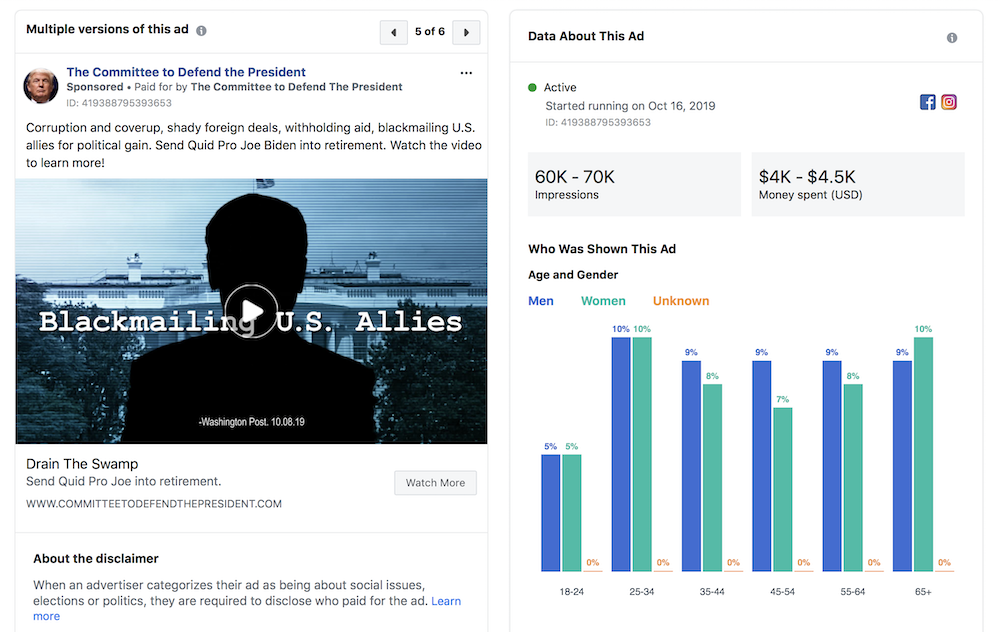

Earlier this month, the platform took down a version of a Trump campaign ad because it used profanity. The same ad also stated that Joe Biden promised Ukraine $1 billion if it fired the prosecutor investigating his son’s company, a claim that fact-checkers had debunked. When the campaign revised the ad to remove the profanity, it was allowed to stay up.

A search this morning of the Facebook Ad Library found six versions of the ad still active on the platform.

Why are political ads with misinformation being approved? Facebook says it exempts content and ads run by politicians from third-party fact-checking, and that it treats their speech as newsworthy content that will not be taken down even if it violates the community standards. Why? The platform argues that viewing this information is in the public interest. It says it will, however, remove or flag content that politicians share if fact-checkers have previously debunked it.

The platform argues that it makes a “holistic and comprehensive” evaluation and considers the context in which the information is being shared, including whether there is an upcoming election, the nature of free speech in the country, whether there is an ongoing conflict or whether the country has a free press.

In other words, Facebook will take action against posts or ads by a politician if they could lead to “real world violence and harm,” Nick Clegg, the company’s VP of Global Affairs and Communications, said in a speech at the Atlantic Festival in Washington, D.C., in September. For now, what constitutes harm is up to the platform to determine.

And while Facebook gets to determine what’s harmful or not – or true or not – it’s important to note that users can’t always tell the difference themselves. Whether or not Facebook changes its policies before the 2020 election, we’re in for a bumpy ride.

- Facebook continues to run political ads with misinformation - October 24, 2019
