How The New York Times uncovered and visualized the dangers faced by child influencers

Content warning: This story includes descriptions of child sexual abuse.

More than half of Gen Z in the United States say they would like to be an influencer if given the chance. And many don’t wait until adulthood to pursue these aspirations.

Brand deals on social media have created a lucrative market in which young children’s lives are documented for profit. These influencers range from girls whose hobbies include dancing, modeling and competing in pageants to infant and toddler girls whose everyday lives are chronicled online. Because Instagram doesn’t allow children under the age of 13 to create an account, many of these accounts are managed by the girls’ mothers.

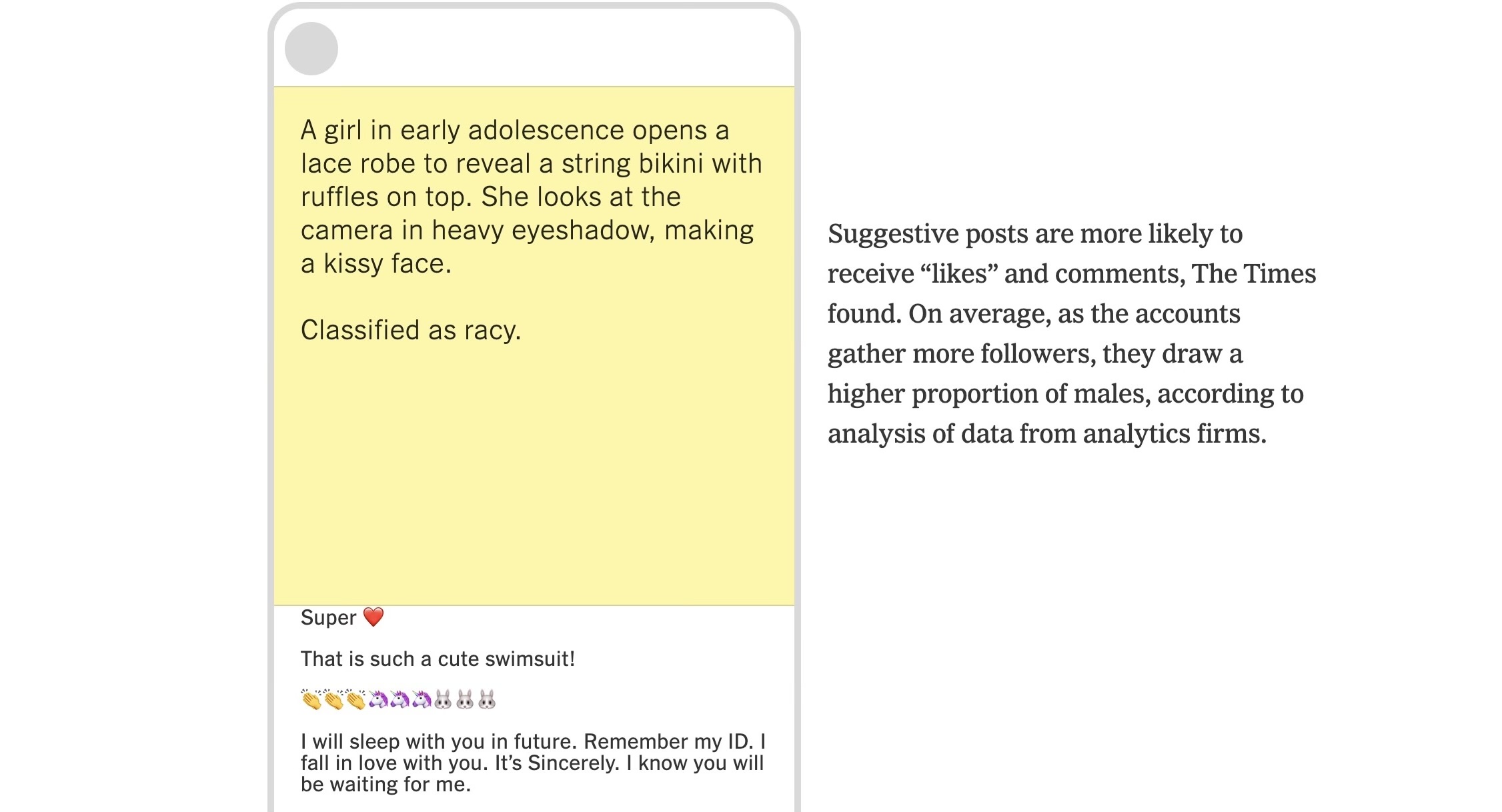

Unfortunately, child influencing doesn’t come with just fame and fortune. A New York Times multimedia investigative story, “A Marketplace of Girl Influencers Managed by Moms and Stalked by Men,” reveals a trend: As these young girls’ accounts gain followers, they also draw a higher proportion of men, many of whom flatter, bully or blackmail the moms and daughters for racier content. Some accounts even offer these male fans exclusive chat sessions and extra paid content via subscriptions.

In the story, Times journalists Jennifer Valentino-DeVries and Michael H. Keller explored data from over 5,000 Instagram accounts that feature young girls and are managed by their mothers. Using image classification software from Google and Microsoft, The Times found that “suggestive” and “racier” posts are more likely to receive “likes” and comments from male followers. Through months of monitoring the messaging app Telegram, they also found that some men shared images and openly fantasized about sexually abusing girls featured in the accounts they follow.

Storybench sat down with Valentino-DeVries and Keller to talk about the conception of the story, some of the challenges they faced and how they visualized their data.

The following interview has been edited for length and clarity.

Where did the idea for this story come from?

Valentino-DeVries: Michael had worked on a series about child sexual abuse material and how it is proliferating online. We were looking for new ways to approach those types of harms. So, I just started looking up on different social networking platforms just to see like “tween influencer” or “pre-teen influencer,” because I didn’t even realize that that could be a thing. Quite a few accounts popped up and they were young girls in leotards or short skirts, high heels, clearly posing like adult influencers would pose. And they had things like Amazon wishlists and cash apps, ways to monetize their accounts. They said they were run by parents. And we were really surprised by this. This was right before the pandemic and got put on the backburner for a couple of years, but we were able to start looking at it again last year.

How did you decide to use the graphics and visuals that accompanied the story?

Keller: It’s a story about imagery, but it’s very challenging subject matter because we didn’t want to show any of the images, even though they’re posted publicly on Instagram, in the same way that we decided not to use first names of any of the parents or name any of the children. We thought it was still important to maintain that level of privacy for people and especially minors involved.

The graphic at the top of the story is about a photo, but it’s really about the interaction between the photography and the comments. The dynamic between these photos of children and the men who comment on them online is a big part of the story.

Aliza Aufrichtig [Times graphics and multimedia editor] and Rumsey Taylor [Times assistant editor] were the people in charge of the other graphic, which was a way for us to explain a bit of our process. We downloaded about 3 million images off of 2.1 million posts or so, and ran those images through Google and Microsoft classifiers to get a rating of whether they were “racy” or “suggestive” or not. We looked at where the two classifiers agreed just to give a conservative estimate. And then we were able to analyze it and found a correlation between their number of likes and comments.

It’s a pretty wordy, thorny process to describe. Whenever we have those kinds of speed bumps in our reporting, we think, “Okay, maybe this is better explained through a visual where we can both show something, because it is about Instagram and images, that made sense, but also walk step by step.”
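For readers who want to see the shape of that analysis, here is a minimal sketch of the two steps Keller describes: counting a post as flagged only when both classifiers agree, then checking how that flag relates to engagement. It is not The Times' code. The field names (`flag_a`, `flag_b`, `likes`), the helper functions and the six toy rows are all invented for illustration, and Pearson correlation is used only as a stand-in, since the interview does not say which statistic the reporters computed.

```python
from math import sqrt


def both_agree(flag_a: bool, flag_b: bool) -> bool:
    """Conservative label: count a post only if both classifiers flag it."""
    return flag_a and flag_b


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Toy rows, invented for this sketch. Each row stands for one post with a
# yes/no label from two hypothetical classifiers and a like count.
posts = [
    {"flag_a": True, "flag_b": True, "likes": 120},
    {"flag_a": True, "flag_b": False, "likes": 40},
    {"flag_a": False, "flag_b": False, "likes": 30},
    {"flag_a": False, "flag_b": True, "likes": 50},
    {"flag_a": True, "flag_b": True, "likes": 90},
    {"flag_a": False, "flag_b": False, "likes": 20},
]

# Step 1: keep only the cases where the two classifiers agree on a flag.
flags = [1 if both_agree(p["flag_a"], p["flag_b"]) else 0 for p in posts]
likes = [p["likes"] for p in posts]

# Step 2: correlate the conservative flag with engagement.
r = pearson(flags, likes)
print(f"flagged posts: {sum(flags)} of {len(posts)}; correlation with likes: {r:.2f}")
```

Requiring agreement means a post that only one classifier flags is treated as unflagged, which shrinks the flagged set and is what makes the resulting estimate conservative.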

Was there anything that surprised you during your reporting?

Keller: The initial sample of accounts that we saw seemed like, “All right, this is about sexualization of young kids online. And let’s explore if that’s the case of what’s happening in there.” And I think what we found was actually a lot darker than that. I did not expect there to be men reaching out to the parents and trying to blackmail them and trying to accuse them of creating explicit imagery.

It was a challenge in the reporting and writing too to try and figure out. We have this real spectrum of accounts and parents that have different experiences: How do we tell one story about it when there is this really deep part?

What has the response to the story been like?

Keller: I’m hearing both in reading the comments on the page and from other people that have reached out, parents — people that we didn’t talk to — that said, “I went through the same thing.” That’s always something we try to do with our reporting: We try to shed light on things that people don’t want to talk about because especially with these kinds of online offenders, when no one talks about it, they gain. The people that are operating in the shadows have a lot to gain from that silence.

If our reporting can prompt those discussions and make more people aware of the dangers and give people who have not felt like they can come out to their friends about it, the space to say, “Yes, this actually did happen, and here’s how you could protect yourself,” then that’s a positive from all of the bad stuff that’s out there.

Valentino-DeVries: I think that in some ways exploring just how dark this can get and how some of these followers who you might not think are that bad – or you just don’t have proof that they’re bad followers – still can be extremely dangerous. I think that’s been really valuable: To get people talking about how they need to take even more steps than perhaps they thought, that things are more dangerous than they realized.