How the Wall Street Journal developed its make-your-own hedcut feature

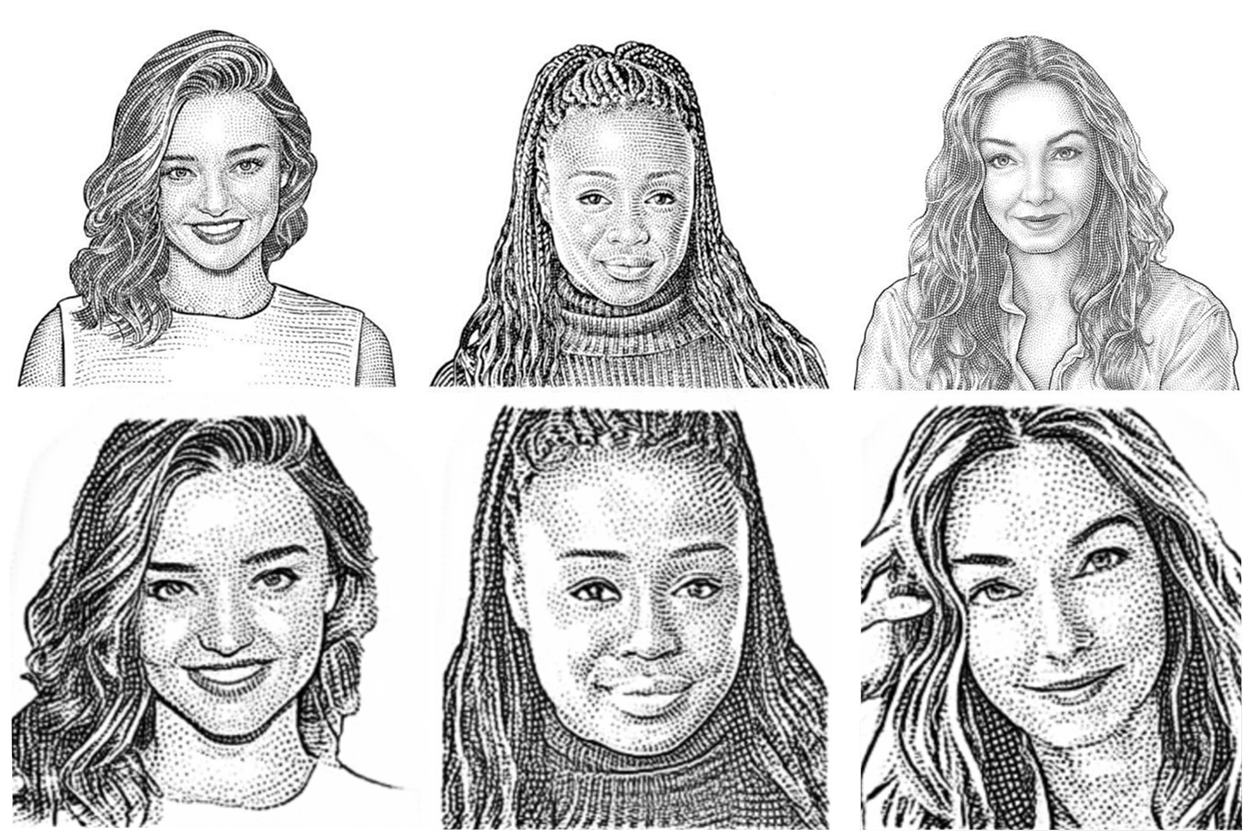

Since December 2019, the Wall Street Journal has offered all members the opportunity to create their own portrait “hedcut,” a drawing made of dots and hatched lines. The Journal typically uses hedcuts to depict notable subjects in stories or the journalists who wrote them, and they appear in the Journal’s daily feature, A-Hed. Machine learning scientist Eric Bolton and chief news strategist Louise Story spoke to Storybench about how the project came together and who was involved, where the inspiration came from, and what challenges they faced in using new technology to extend an old newsroom practice.

Where did the inspiration come from to automate these hedcuts?

Bolton: The inspiration was kind of an idea that had been floating around. Someone else on the team had started working on it and was struggling to get convincing results. The idea is that we wanted to bring AI to WSJ traditions. That’s how it started out. We wanted to see if we could do anything convincing.

Story: These drawings have been with the Wall Street Journal since 1979. This year was actually the 40th anniversary. We have these artists here that make these drawings of well-known people and also, after you’ve been a reporter or editor here for a long time, it becomes an amazing privilege to get a hedcut.

This project speaks to how we are looking at our entire digital experience in a connected way across our teams and how we’re working across disciplines. I lead teams across a lot of different areas. I lead the R&D team, and we did the AI model over the past year. I also lead product design and engineering. When I’m with a group that is doing something cutting-edge that should become an ongoing part of our product experience, we’ll bring the teams together to do that. I also lead our content strategy, and I have reporters on my team and we’re looking at how to change the content at the Journal.

Often at a newsroom, you would see a cool feature and it would ride with a story and then it would flush away forever and we never see it again. But in this case, we made this feature, we ran some stories about it, but we also made it into our product experience. It’s built into our customer member center and it has now been incorporated into subscriber onboarding, so when people become Wall Street Journal subscribers, they also have the opportunity to make their hedcut. And if you look at our comment section, they all have profile pictures. That was pretty revolutionary in the way we structured things at the Journal. We’re not thinking about content separate from our news user experience. We integrated these things together so that we have things that have lasting impacts on our audience.

Can you tell me about each of your roles in this project?

Story: I joined the Journal in fall 2018 to work with Matt Murray, our editor in chief, leading our strategy. Broadly speaking, our strategy is trying to find Wall Street Journal traditions that are special and marrying that with the understanding of what our audience values. To do that, we are becoming a much more open, accessible, innovative and resonating place. This project is an important one because we take in a tradition and we’ve infused some technology and we’ve democratized it. It is no longer an elite thing. We’ve put our focus on community and our audience. We have become more open. When I came in and was working with Murray and other leaders here in strategy, it made a lot of sense to do this.

Bolton: I joined the Journal about five months ago as a machine learning scientist and that’s when this project started. I thought this was a great opportunity to apply machine learning skills. I’m the guy writing the code.

What was the goal of this project?

Bolton: We didn’t want it to be just a dotted picture of someone. We wanted it to feel like a drawing that had been made by a person. The other thing is we wanted it to be true to form.

The first batch of results that came out were these horrific-looking drawings of people that the machine thought were a good idea of a stipple. We got some really weird outputs. During the exploratory phase, we used style transfer. You’re basically giving the network just one image for what a hedcut should look like. It’s randomly associating patterns from that image to a person’s face, and the result was this creepy-looking person with bulging eyes and a hatched face. It would match the pattern in the hedcut drawings, but it didn’t look like a very good drawing of the person.

How did you adapt to these challenges?

Bolton: Once we had an improved model figured out, we got some pretty good results fairly quickly. They weren’t as good as what we saw today, but we felt comfortable going forward and saying this is something we could show to our members. We did some testing on the machine and worked for a while on it with a diverse group of people. It really struggled in particular with bald and bearded people. We just don’t have as many hand-drawn pictures of them as we do of people with common hairstyles. These drawings take hours to produce. We can’t really say we’re gonna commit to doing more drawings of bearded people for our machine to train on. Instead my approach was: Why don’t we go through and label what we do have? It took about two days for me and an [intern] to go through and label each of the 2,000 images we had by the kind of hairstyle it showed. Then we identified the least common ones and told the machine to focus on those, and eventually we could get pretty convincing results for everyone. Also, throughout this testing phase we were constantly adding more photographs and drawings for the machine to reference. Then it was able to get a lot better, to the point where we felt comfortable releasing it to members.
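One common way to make a model "focus on" rare categories is to sample training examples with weights inversely proportional to how often their label occurs. The sketch below illustrates that general idea only; it is not the Journal's code, and the label names, counts, and function names are hypothetical.

```python
import random
from collections import Counter


def inverse_frequency_weights(labels):
    """Give each example a weight of 1 / (number of examples sharing its label)."""
    counts = Counter(labels)
    return [1.0 / counts[label] for label in labels]


def sample_training_indices(labels, k, seed=0):
    """Draw k example indices (with replacement), favoring rare labels."""
    rng = random.Random(seed)
    weights = inverse_frequency_weights(labels)
    return rng.choices(range(len(labels)), weights=weights, k=k)


# Hypothetical hairstyle labels for a small, imbalanced set of drawings.
labels = ["common hairstyle"] * 90 + ["bald"] * 5 + ["bearded"] * 5

# Each label group ends up with the same total weight, so a bald or bearded
# drawing is drawn far more often than any single common-hairstyle drawing.
batch = sample_training_indices(labels, k=30)
sampled_labels = Counter(labels[i] for i in batch)
```

With these weights, every label group has the same total probability of being sampled, so the rare groups are no longer drowned out by the common ones. Other approaches, such as weighting the loss per example, pursue the same goal.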

Story: Everything we do going forward, we have to have people at the table with all different skillsets. That’s how we innovate. That’s how we change. And that’s how we grow. The skillsets for this project were data scientists, AI and machine learning experts, artists, engineers who could build the experience and tools that we use, designers and project managers to make that happen, reporters and editors to write stories about the tradition of this. So really all those people together made this project work.

Was there a defining moment where you felt comfortable?

Bolton: There was a defining moment. I remember my manager came up to me saying he was about to present it to Matt Murray, the editor-in-chief. He asked if we could get a really good example of someone whose drawing was rendered really well. We tried a high-definition photograph of Jennifer Lopez, very professionally photographed with perfect lighting, and it came out amazingly. So we showed that to Matt Murray. Eventually, we got it up on the membership page, but we just needed to pass that final muster. That moment was definitely defining. I wasn’t actually in the meeting but I heard about it afterwards.

What were some challenges you faced?

Bolton: There were also issues with skin color. That was obviously a big worry because we didn’t want any racial bias in our machine. We tried again and again to get a hedcut from a very low-quality photo of Michael Jordan. It was a compilation of challenges to get this to work well. Eventually, it worked out and it was ready to send to the membership team.

Have the hedcuts had any impact on engagement or membership numbers?

Bolton: I hope so. Certainly a lot of people have been using it, which has been an interesting problem to deal with because the tool briefly went down the first day. Now we have all this data to turn to, but we have to be careful of how we store the data because we can’t store the image data for very long due to some legal obligations.

What has the response been like?

Story: Half of the people who came to the page where we had the hedcut tool actually made a hedcut. This is kind of amazing if you think about how much work it involves. You need to have a picture, then upload a picture, etc. People are really enthused about it.

Artists will still draw the hedcuts for journalists and for celebrities that appear in the newspaper. We are far from replacing the artist. I don’t think we’re anywhere close to that. The drawings the model produces don’t have that same quality. It’s just a way to democratize it a little bit, so that anyone who is a part of the WSJ ecosystem can have this sort of badge of honor, even though it might not have been drawn by an artist. But honestly, the ultimate badge of honor will be having your own hand-drawn stipple.

To learn more about this project, read Bolton’s piece with Cynthia Hua on Medium: “Can a machine illustrate WSJ portraits convincingly?”

- How the Wall Street Journal developed its make-your-own hedcut feature - February 23, 2020

I tried to access aiportrait.wsj.com and the site is inactive.

Me too!!!

No luck accessing the site.

Can’t get the site to load even though it is advertised in today’s print WSJ edition?!??

The sentence “During the exploratory phase, we used file transfer” ought to be *style* transfer (not file transfer). See e.g. https://en.wikipedia.org/wiki/Neural_style_transfer