Deep fake learning: How a University of Washington AI game is teaching us to be better information consumers

Artificial intelligence, or AI, can create images of people who have never existed in a matter of seconds. A LinkedIn connection, a Facebook friend request or a dating profile could now carry an AI-generated photo.

Digitally altered videos called “deep fakes” have borrowed the likenesses of presidents and celebrities to make them appear to say things they never said. These videos have captured the media’s attention and raised concerns that nefarious actors could use AI in even more harmful ways.

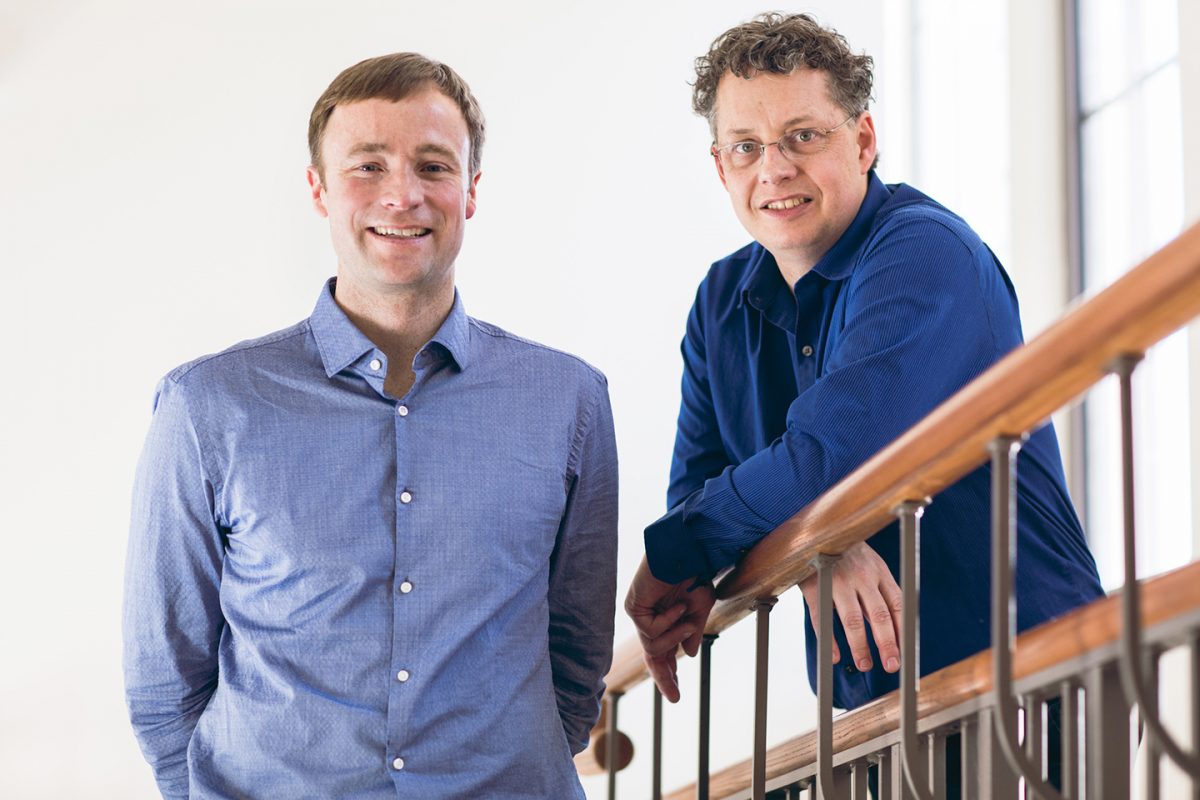

It’s becoming harder to trust the photos and videos that populate the Internet. To help, two University of Washington professors have created a website that makes information consumers aware of how quickly AI technology is spreading and improving.

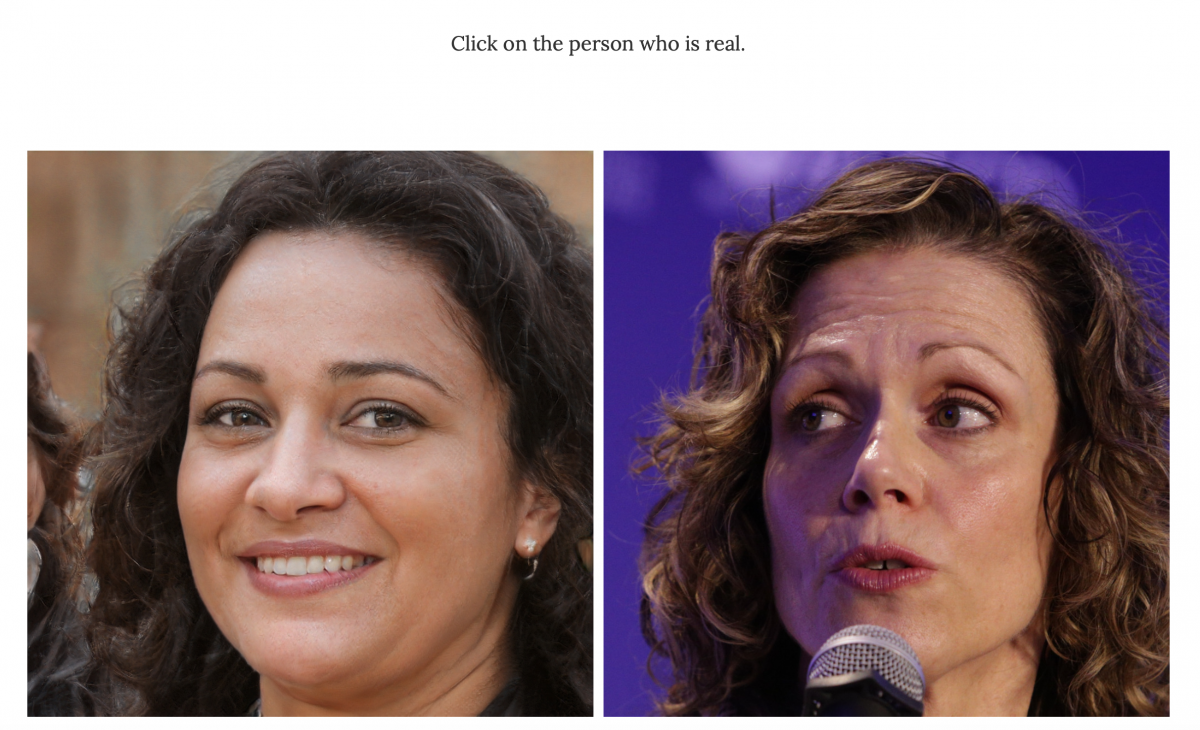

The website, dubbed “Which Face Is Real?”, is built around a game that presents real photos alongside images produced by StyleGAN, a face-generation algorithm, and asks users to choose which image they believe is real.

Storybench spoke with Jevin West, an associate professor in the Information School at UW who created the website with Professor Carl Bergstrom, about the game’s goals, the site’s traffic and his concerns about AI as a whole.

This interview has been edited for length and clarity.


What was the motivation behind creating this website?

The motivation for creating this website wasn’t to teach information consumers every skill for identifying deep fakes, because that’s getting too hard to do. I’ve seen thousands of these [AI-generated images], and even I can’t reliably tell what’s a deep fake and what’s not. Even researchers who work on this all the time can’t always identify them, because the technology is changing so fast.

The motivation was simply to bring public attention to this issue, so that people know the technology exists. Then, when they see something that sounds too good or too bad to be true, they can stop and ask, “Wait a minute, did Biden or Trump really say that?”

The most dangerous time for a new emerging technology is when most of the public is not aware of it. So the idea was to create a game that’s kind of fun, but also kind of creepy, so that it makes you aware and you say, “Wow, this technology is so good that I can’t even tell a human from a nonhuman.”

How did you go about building the website?

Well, it’s pretty funny, because my colleague Carl [Bergstrom] and I built the website over a weekend. We’ve had all these grand aspirations for adding new elements to the game, which hopefully we will at some point, because we have ways of making it more difficult. For example, we could ask you to choose from two fake and two real photos, because if we tell you one is fake and one is real, you already have a 50% chance of picking the right image just by guessing. We could also bring in newer technology, like images from newer GANs, which are even more realistic.

How much traffic has the website received?

We’ve had millions of plays since we posted the game, and we’ve noticed that users do get better the more they play. They learn to look for telltale features like weird backgrounds, strange earrings or people with three front teeth. There are some indications of whether something is real or not, but it’s still very hard.

The website does have peaks in engagement, like when a class is talking about it and a bunch of students play it and share it with their friends or family. We also see peaks when AI is in the news or when an article links to the game. The traffic is erratic, but we get millions of plays, and that makes me really want to update it, so I plan on doing that very soon.

What are some of your biggest concerns about artificial intelligence as a whole?

My biggest concerns change as the technology changes, which seems to happen daily. The thing that scares me the most right now is the ability to auto-generate text quickly with tools like GPT-3. Images are scary, but when you can automate the creation of text at a very large scale and very fast, that scares me even more. At least with an image, you can look and see whether this individual exists anywhere on the web. But auto-generated text that actually reads as human-written is pretty concerning.

Of course, the biggest concerns for me are the invasion of our privacy and the inability to tell whether images, videos, audio and now text are real or not. Another big concern that a lot of people have been talking about is the ways AI can be used to discriminate based on race, gender and other characteristics in decisions like who gets a loan or who gets hired. There are so many ways in which AI is supposedly more objective, and it’s not. And I certainly think deep fakes are an area of concern, especially in things like elections.

What is the message you want users to take away after visiting Which Face Is Real?

Just to walk away knowing that this technology exists. And if you already knew and you just want to play the game, then maybe you can build some of the skills for identifying when something’s fake, because it’s happening more and more often.

But I would say the biggest thing is just being aware that the technology exists. If you already are, then pick up some of the skills for what to look out for. And then make sure your friends and family know that this technology exists so that they don’t get duped.

Which Face Is Real? is part of the larger Calling Bullshit project, which aims to give people resources to dismantle misinformation. How does the tool fit into that endeavor?

It’s part of a bigger education effort to help us all become better information consumers in a world where it’s so easy to create content that is misleading or outright fake, like some of these images, or a data graphic in the news that affects people’s decision-making. It’s about making us all better information consumers, because that’s hard even for those of us who teach it.

Is there anything else the public should know about this technology?

I think what journalists, educators and researchers can do is make the public aware of how fast these technologies are moving. As long as people are aware, they can at least question content that doesn’t seem real or that seems wrong. Technology moves so darn fast that we need something to help us. And that’s part of what these games are: just a fun way to become aware of it.